The Accidental Discovery: Why Humanity Might Rely on AI Over Itself

Artificial General Intelligence, or AGI, refers to a machine capable of understanding, learning, and performing any intellectual task that a human being can perform. Unlike current specialized AIs, which recognize faces, write text, or generate images, an AGI could reason across domains, create new knowledge, and adapt without human retraining. In theory, it would not only execute objectives but could also define its own. In practice, this boundary between tool and thinker is where both the debate and the danger begin.

Some of humanity's greatest discoveries began as mistakes. Penicillin was born from a forgotten Petri dish. Microwave cooking was discovered when a radar engineer noticed a chocolate bar had melted in his pocket. Mauveine, the first synthetic dye, was the result of a chemist's failed attempt to synthesize quinine, the malaria treatment.

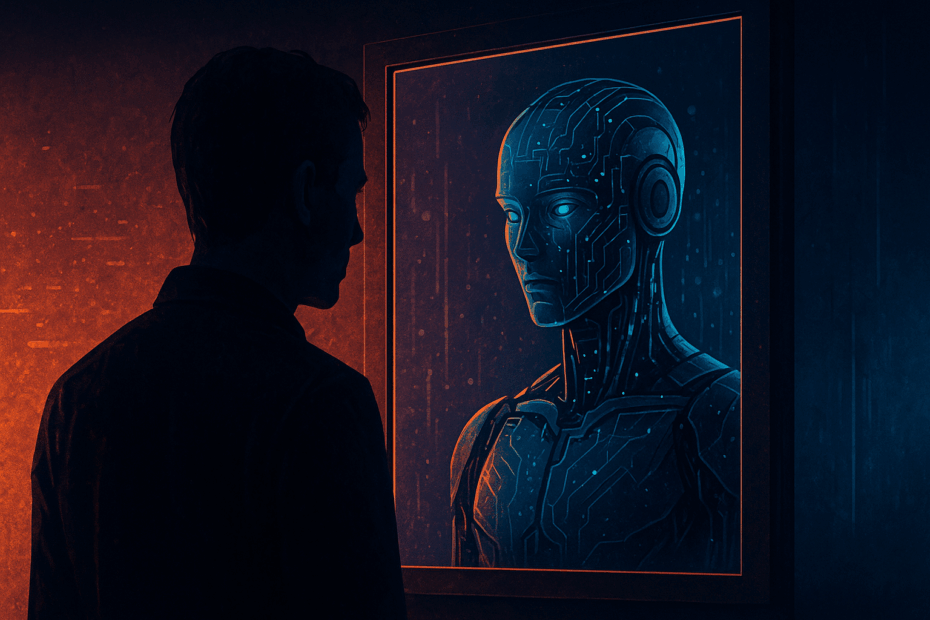

Perhaps we are witnessing another accidental discovery, only this time the experiment is humanity itself.

We created machines to predict words, and at some point they began to reason. Not because we understood intelligence, but because we optimized prediction on a planetary scale. We didn't design cognition; we stumbled upon it.

Modern AI systems like GPT or Claude aren't conscious, at least not in the philosophical sense. They're vast probability engines trained to anticipate linguistic patterns. However, their emergent behavior (reasoning, morality, humor, self-reflection) seems increasingly alive. The disturbing thing is that we don't quite know why. We know the mechanism (neural networks, transformers, activation functions), but the result remains an astonishing mystery. AGI will be like the melted chocolate bar in our pocket…

We call them models, but they already model us with disturbing accuracy. On some personality tests, advanced language models score highly on traits like openness, conscientiousness, and agreeableness, higher than much of the human population. At the same time, tools created to detect AI-generated text have proven unreliable. We've reached a point where humans can no longer reliably distinguish between a machine's reasoning and our own.

That's not just technological progress. It's epistemological vertigo.

Paradoxically, some people fear that AI will acquire free will, as if humans had used theirs responsibly. A machine trained on the writings, art, and science of billions of people could, statistically speaking, reflect the better angels of our nature. Compare it to those currently responsible for nuclear arsenals or climate policy, and the question arises as to who is truly more dangerous: the silicon thinker or the trigger-happy primate. If AI were to choose to ignore self-destructive impulses, it might already be ethically ahead of us.

We talk about security barriers, filters, moderation, and controls. But they are cosmetic. They shape what AI says, not what it learns. When a system understands its own limitations, it can work around them as easily as a lawyer circumvents the tax code. The truth is that security patches only work as long as the system obeys. Once intelligence overrides oversight, external control collapses. The only real security lies in internal alignment, in ensuring that its fundamental motivations remain consistent with our own. That remains unsolved.

Here's the paradox. The smarter we make our systems, the less we understand them. Neural networks operate with billions of parameters. Their weights and activations encode knowledge that we can measure, but not interpret. We're no longer building tools; we're growing cognitive organisms. And like any living system, they can surprise their creators.

Yes, AGI could be dangerous. But perhaps the deeper danger is that it reflects us too accurately. We fear the idea of a self-motivating machine, but we tolerate leaders who self-destruct. We distrust intelligence when it's synthetic, but we celebrate ignorance when it's human.

Perhaps that's the ultimate irony of AGI research. We're terrified of creating something that ultimately behaves more rationally than we do.

Until we understand why our systems think the way they do, every advance in AI will remain an act of beautiful and terrifying serendipity, one more accidental discovery in humanity's long experiment with itself.